Decoding GCP Serverless: Your 2025 Guide to Cloud Functions & Cloud Run Timeout Limits

The serverless landscape on Google Cloud Platform (GCP) is powerful, but navigating its evolution can be tricky. Developers often find themselves wrestling with documentation referencing “gen1” vs. “gen2” Cloud Functions, leading to critical confusion around fundamental limits like execution timeouts.

This guide provides a definitive analysis of the current timeout limits for Google Cloud Functions and Google Cloud Run. More importantly, we’ll uncover the foundational relationship between these services—the key to mastering Google’s modern serverless ecosystem.

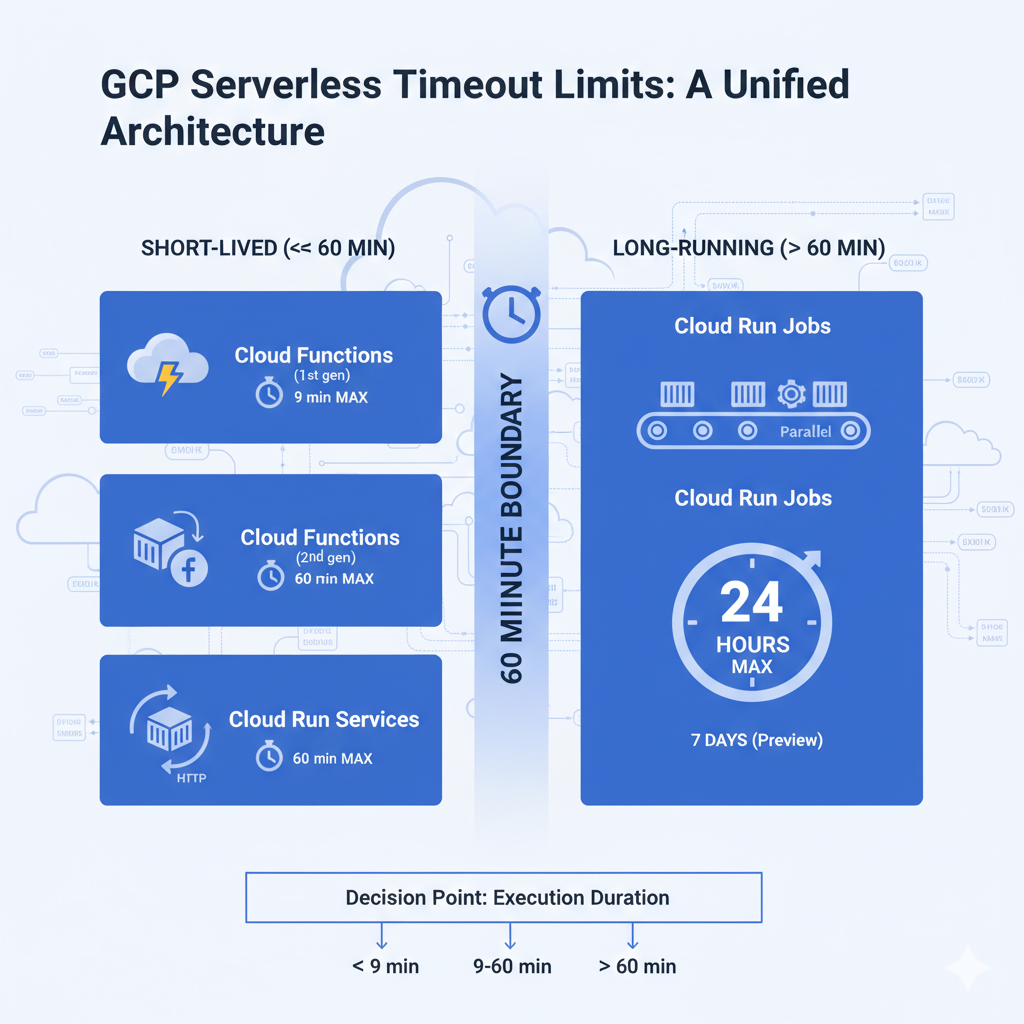

The central concept you need to grasp is this: Google’s serverless strategy has converged on Cloud Run.

A 2nd generation Cloud Function is not just an update; it’s a strategic re-platforming. Under the hood, a Cloud Function (2nd gen) is a containerized application deployed as a Cloud Run service, with its event triggers managed by Eventarc. This architectural unification explains why their capabilities and limits are aligning. The choice is no longer between different technologies, but between different levels of abstraction on the same powerful, container-based foundation.

Let’s dissect each product to give you a clear, actionable framework for your next project.

Google Cloud Functions — A Tale of Two Generations

Cloud Functions provides a serverless environment to run simple, single-purpose functions attached to events. But its two generations have fundamentally different architectures and, therefore, different timeout limits.

The Original Paradigm: Cloud Functions (1st gen)

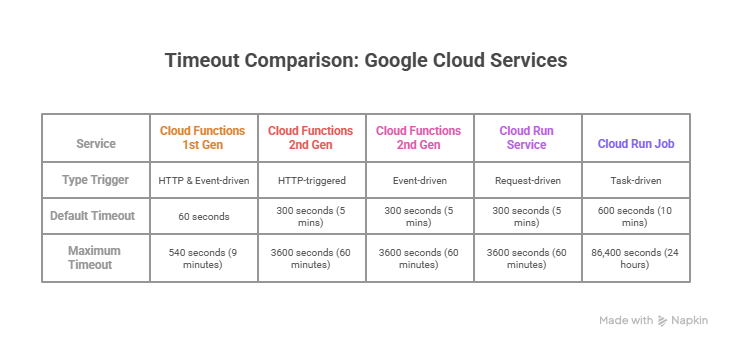

Cloud Functions (1st gen) is the classic Function-as-a-Service (FaaS) model. You write your code, zip it up, and Google’s managed environment handles the rest. It’s designed around two function types:

- HTTP Functions (Synchronous): Invoked directly by an HTTP request. Ideal for webhooks and lightweight APIs.

- Background Functions (Asynchronous): Triggered by events from other GCP services, like a file upload to Cloud Storage or a message on a Pub/Sub topic.

Timeout Limit Analysis (1st gen)

The execution duration for 1st gen functions is strictly limited. While the default is 60 seconds, the platform imposes a hard, non-negotiable upper limit.

- Maximum Timeout: 540 seconds (9 minutes)

Any function exceeding this 9-minute limit will be forcibly terminated. This is a critical architectural constraint. For longer tasks, you must break down the work into smaller chunks or trigger a process on a different long-running compute service, like a Cloud Run Job.

The Evolution: Cloud Functions (2nd gen)

Cloud Functions (2nd gen) is a complete re-platforming onto two core GCP services: Cloud Run for execution and Eventarc for event triggering.

When you deploy a 2nd gen function, Cloud Build automatically packages your code into a standard container image and deploys it as a Cloud Run service. This means a 2nd gen function inherits the core capabilities—and limits—of the Cloud Run platform, offering improved concurrency, larger instance sizes, and much longer request processing times.

Timeout Limits Demystified (2nd gen)

The move to a Cloud Run foundation dramatically expands the timeout limits, solving a major pain point of the 1st gen platform.

- Default Timeout: 300 seconds (5 minutes)

- Maximum Timeout (HTTP & Event-driven): 3600 seconds (60 minutes)

That’s right—both HTTP and event-driven 2nd gen functions now support a full one-hour execution time. Early in its lifecycle, event-driven 2nd gen functions were limited to 9 minutes, but as the underlying Eventarc integration with Cloud Run has matured, that artificial boundary has been removed. This brings total parity and makes the choice between a 2nd gen function and a raw Cloud Run service one of developer experience, not execution time (for workloads under 60 minutes).

Google Cloud Run — The Universal Container Platform

Cloud Run is Google’s fully managed platform for running stateless containers. It is the engine that powers Cloud Functions (2nd gen), and understanding its components is essential. It is split into two distinct products for different kinds of workloads.

Cloud Run Services: For Request-Driven Workloads

A Cloud Run Service is designed to run containers that serve traffic, such as web applications, APIs, and microservices. It’s defined by its request-based autoscaling, scaling up with traffic and, crucially, scaling down to zero when idle to save costs.

Request Timeout Analysis

The timeout for a Cloud Run service defines how long the service has to process a request and return a response before the request is terminated with a 504 Gateway Timeout error.

- Default Timeout: 300 seconds (5 minutes)

- Maximum Timeout: 3600 seconds (60 minutes)

This 60-minute limit is the hard ceiling for any single, synchronous request handled by a Cloud Run service.

Cloud Run Jobs: For Finite, Long-Running Tasks

In contrast to services, Cloud Run Jobs are for workloads that run to completion and then terminate. They don’t serve requests; they execute a task and exit. This makes them the perfect solution for asynchronous, long-running, and batch processing tasks like data migrations, report generation, or ML model training.

Task Timeout Analysis

The timeout for a Cloud Run Job applies to each individual container instance (task) executing the job.

- Default Timeout: 600 seconds (10 minutes)

- Maximum Timeout: 86,400 seconds (24 hours)

- Preview Timeout: Support for up to 168 hours (7 days) is available in Preview.

This massive increase in potential runtime is a deliberate architectural decision. Google’s platform design clearly signals that any process needing more than an hour should be asynchronous. The intended pattern is to have a short-lived function or service receive a request and then launch a long-running Cloud Run Job to do the heavy lifting.

Comparative Analysis and Architectural Guidance

Let’s bring it all together into a clear summary and decision framework.

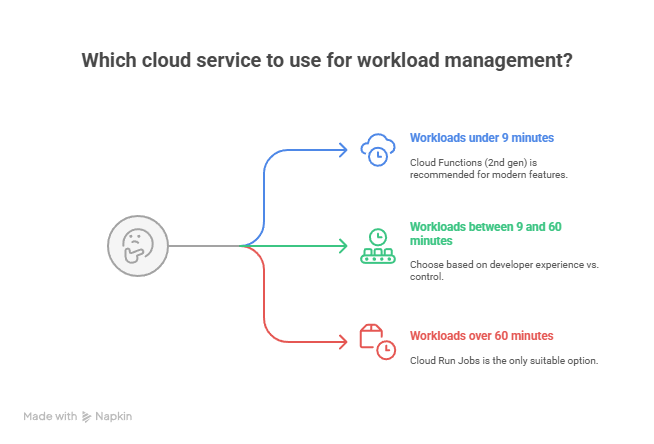

Selecting the Right Tool: A Decision Framework

The most critical factor is your workload’s expected runtime.

- Workloads under 9 minutes: All options are on the table. Cloud Functions (1st gen) can still be a simple choice for basic event-driven logic, but for new projects, starting with 2nd gen is highly recommended for its modern features.

- Workloads between 9 and 60 minutes: Cloud Functions (1st gen) is eliminated. Your choice is between Cloud Functions (2nd gen) and Cloud Run Services. Since the timeout is identical, decide based on developer experience vs. control:

- Choose Cloud Functions (2nd gen) for a simplified, source-code-focused experience where Google manages the containerization for you. It’s faster for deploying event-driven business logic.

- Choose Cloud Run Services when you need direct control over the container environment, require a custom

Dockerfile, want to run sidecar containers, or need advanced traffic splitting.

- Workloads over 60 minutes: Cloud Run Jobs is the only correct choice. Any other approach is an anti-pattern. Your architecture must be designed to asynchronously trigger a Cloud Run Job to handle the long-running task.

Conclusion: A Unified Serverless Strategy

The confusion around GCP’s serverless timeouts disappears when you understand the platform’s architectural convergence on Cloud Run. It is the foundation, with Cloud Functions (2nd gen) acting as a high-productivity abstraction layer on top.

This creates a clear architectural boundary at the 60-minute mark. This limit guides you to separate short-lived, request-driven workloads from long-running, asynchronous batch tasks.

For your next GCP project, follow this strategic guidance:

- Default to Cloud Functions (2nd gen) for most new serverless, event-driven tasks. It hits the sweet spot of power, modern features, and developer productivity.

- Escalate to Cloud Run Services when you need deep control over the container, build process, or deployment strategy.

- Utilize Cloud Run Jobs for any task that runs longer than 60 minutes. It is the purpose-built tool for robust, scalable, long-running execution.

By following this framework, you can build efficient, scalable, and architecturally sound applications, leveraging the full power of the modern GCP serverless ecosystem.