Strategic Object Storage Comparison: AWS S3, Azure Blob, and GCP Cloud Storage for Enterprise Workloads

I. Executive Summary: The Strategic Object Storage Triad

Object storage has evolved from a simple repository for unstructured data into the foundational layer for modern data architectures, including data lakes, artificial intelligence/machine learning (AI/ML) pipelines, and global enterprise archives. The three dominant hyperscale providers—Amazon Simple Storage Service (S3), Microsoft Azure Blob Storage, and Google Cloud Storage (GCS)—offer compelling solutions, yet their underlying architectures, pricing mechanisms, and unique feature sets compel strategic data engineers and cloud architects to move beyond mere per-gigabyte (GB) cost comparisons. The choice is fundamentally about architectural fit, expected access patterns, and minimizing Total Cost of Ownership (TCO) volatility driven by operations and retrieval fees.

I.A. Core Value Propositions

Each platform maintains a distinct strategic advantage tailored to specific organizational needs:

- AWS Simple Storage Service (S3): S3 is widely regarded as the industry standard, known for its mature feature set, maximum flexibility, and unparalleled ecosystem integration. Its vast range of specialized storage classes, including S3 Express One Zone for ultra-low latency computing, and its seamless connectivity with AWS analytics services like Athena, Redshift, and EMR, make it the default choice for data lake architectures built within the AWS ecosystem.

- Azure Blob Storage: This service excels in environments deeply invested in the Microsoft enterprise stack, offering strong synergy with Azure services such as Azure Synapse, Data Factory, and Data Lake Storage Gen2 (ADLS Gen2). ADLS Gen2, built upon Blob Storage, provides a crucial Hierarchical Namespace (HNS), which improves the performance of metadata operations and file system semantics required by big data frameworks like Hadoop and Spark. Azure’s robust hybrid cloud capabilities also appeal to organizations maintaining substantial on-premises infrastructure.

- GCP Cloud Storage (GCS): GCS differentiates itself through a performance-centric architecture that guarantees strong global consistency. Its most distinctive value proposition is uniform millisecond latency across all of its storage classes, from the frequently accessed Standard tier to the deepest Archive tier. This architectural decision transforms long-term archival data from a passive compliance asset into an active, instantly analyzable resource.

I.B. High-Level TCO Summary and Key Trade-offs

The financial evaluation of object storage services must extend beyond monthly storage fees to encompass access costs: specifically operations (PUT/GET requests), data retrieval from cold tiers, and network egress (data transfer out). A marginal difference in at-rest storage price can be quickly negated by high retrieval fees or by the cumulative charges of very large request volumes.

The primary trade-off is observed between cost efficiency and latency guarantees:

- AWS S3 offers one of the lowest absolute archival costs via S3 Glacier Deep Archive, priced at approximately $0.00099 per GB/month. However, this necessitates accepting retrieval latency measured in hours.

- GCP GCS, conversely, eliminates restore delays: every storage class, including Archive, serves data within milliseconds. In exchange, it requires accepting a slightly higher storage cost and significantly higher retrieval fees for its colder tiers (up to $0.05 per GB retrieved from Archive Storage).

- Azure offers competitive pricing and latency characteristics that align closely with AWS for equivalent redundancy levels.

II. Foundational Architecture, Consistency, and Ecosystem Maturity

The fundamental architectural design choices made by each provider determine their suitability for enterprise-scale workloads, particularly regarding data reliability and integration complexity.

II.A. Data Durability and Consistency Guarantees

All three major providers design for exceptional data durability, a non-negotiable standard in object storage. AWS S3, Azure Blob, and GCP GCS are each designed for at least 99.999999999% (eleven nines) annual durability. In AWS's own framing, storing 10 million objects means expecting, on average, the loss of a single object once every 10,000 years. This durability is achieved through redundant storage across multiple devices and facilities within a region or across multiple regions.
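The durability figure translates into expected losses with simple arithmetic. The sketch below assumes each object is lost independently with an annual probability of 10^-11; this is a modeling simplification, since providers publish durability as a design target rather than a per-object guarantee. Exact fractions avoid floating-point rounding, and the function names are illustrative.

```python
from fractions import Fraction


def expected_annual_losses(num_objects: int, nines: int = 11) -> Fraction:
    """Expected number of objects lost per year.

    Assumption: durability with `nines` nines (11 -> 99.999999999%) is modeled
    as an independent annual loss probability of 10**-nines per object.
    """
    annual_loss_probability = Fraction(1, 10 ** nines)
    return num_objects * annual_loss_probability


def years_per_expected_loss(num_objects: int, nines: int = 11) -> Fraction:
    """Average number of years between single-object losses."""
    return 1 / expected_annual_losses(num_objects, nines)


print(expected_annual_losses(10_000_000))
print(years_per_expected_loss(10_000_000))
```

For 10 million objects this gives an expected 1/10,000 of an object lost per year, that is, about one object every 10,000 years.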

Regarding data consistency, subtle differences impact complex distributed applications:

- AWS S3 historically used an eventual consistency model for overwrite and delete operations, but since December 2020 it has provided strong read-after-write consistency automatically for all GET, PUT, and LIST operations (including overwrites and deletes) within a region, at no additional cost.

- GCP Cloud Storage is designed with inherent strong global consistency. Once a write operation is confirmed, subsequent reads and object listings from any location are guaranteed to see the latest version of the data, including in dual-region and multi-region buckets. Because S3's guarantee is scoped to a single region and its cross-region replication is asynchronous, this global guarantee is a meaningful architectural advantage for multi-region data lakes and high-velocity analytics where sequential data integrity is paramount.

II.B. API Compatibility and Vendor Ecosystem Lock-in

The complexity and maturity of the Application Programming Interface (API) heavily influence migration paths and third-party tool integration.

The AWS S3 API has become the de facto industry-standard interface for object storage. Its extensive maturity and widespread adoption ensure maximum integration with virtually all backup solutions, cloud management tools, and cloud-native applications. Organizations already leveraging the AWS ecosystem benefit from seamless integration with services like EC2, Lambda, and Athena.

GCP Cloud Storage has acknowledged the dominance of the S3 API by developing robust interoperability features. GCS provides native compatibility with S3-centric tools and libraries via its XML API. Users can simplify migrations by merely changing the request endpoint to the Cloud Storage URI (https://storage.googleapis.com) and configuring the tool to use GCP's HMAC keys. Furthermore, the gcloud command-line interface (CLI) allows users to manage objects in Amazon S3 buckets directly, demonstrating Google's commitment to facilitating multi-cloud workflows.
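A minimal sketch of the endpoint swap described above, assuming the boto3 SDK on the client side. The helper names are hypothetical, and the credentials are a Cloud Storage HMAC key's access ID and secret. Depending on the SDK version, additional client configuration may be required when talking to a non-AWS endpoint.

```python
GCS_XML_API_ENDPOINT = "https://storage.googleapis.com"


def gcs_interop_client_kwargs(hmac_access_id: str, hmac_secret: str) -> dict:
    """Keyword arguments that point an S3 SDK client at the Cloud Storage XML API.

    The HMAC access ID and secret come from a Cloud Storage HMAC key and take
    the place of AWS access keys.
    """
    if not hmac_access_id or not hmac_secret:
        raise ValueError("both an HMAC access ID and an HMAC secret are required")
    return {
        "endpoint_url": GCS_XML_API_ENDPOINT,
        "aws_access_key_id": hmac_access_id,
        "aws_secret_access_key": hmac_secret,
    }


def make_gcs_client(hmac_access_id: str, hmac_secret: str):
    """Create a boto3 S3 client that sends requests to Cloud Storage instead of S3."""
    import boto3  # imported lazily so the helper above works without boto3 installed

    return boto3.client("s3", **gcs_interop_client_kwargs(hmac_access_id, hmac_secret))
```

Existing S3 code can then call methods such as `list_objects_v2(Bucket="example-bucket")` on the returned client, where the bucket name is a placeholder for an existing GCS bucket.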

Azure Blob Storage exposes its own REST interface, the Azure Blob API, and does not provide native S3 compatibility out of the box. Organizations aiming to migrate existing applications designed for the S3 API to Azure must rely on third-party solutions, such as Flexify.IO, which acts as an S3-to-Azure proxy, transparently translating API calls on the fly. This lack of native S3 compatibility represents a measurable hurdle for organizations seeking to adopt Azure storage without modifying extensive application codebases.

II.C. Data Protection and Immutability (WORM Compliance)

All three providers offer essential features for maintaining data governance and compliance through immutability, also known as Write-Once-Read-Many (WORM) storage. These features are critical for regulatory compliance (e.g., healthcare, finance) and increasingly vital for modern ransomware protection.

- AWS S3 Object Lock is the established industry solution, blocking permanent object deletion or overwriting during a customer-defined retention period.

- Azure Blob Storage provides equivalent Immutable Storage capabilities to enforce retention policies.

- GCP Cloud Storage utilizes a Retention Policy to achieve the same WORM compliance objective.
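On the AWS side, a bucket-level default retention rule is expressed as an `ObjectLockConfiguration` document. The sketch below builds that document in the shape boto3's `put_object_lock_configuration` accepts, assuming a bucket created with Object Lock (and therefore versioning) enabled. The helper name and bucket name are hypothetical.

```python
from typing import Optional

VALID_MODES = {"GOVERNANCE", "COMPLIANCE"}


def object_lock_configuration(mode: str, days: Optional[int] = None,
                              years: Optional[int] = None) -> dict:
    """Build a default-retention ObjectLockConfiguration for an S3 bucket.

    S3 accepts either Days or Years for the default retention period, not both.
    """
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}")
    if (days is None) == (years is None):
        raise ValueError("specify exactly one of days or years")
    period = {"Days": days} if days is not None else {"Years": years}
    if min(period.values()) <= 0:
        raise ValueError("retention period must be positive")
    return {
        "ObjectLockEnabled": "Enabled",
        # COMPLIANCE: no user, including root, can shorten retention or delete
        # protected versions early. GOVERNANCE: users granted the
        # s3:BypassGovernanceRetention permission can override it.
        "Rule": {"DefaultRetention": {"Mode": mode, **period}},
    }


# Example: a seven-year compliance-mode default retention rule.
config = object_lock_configuration("COMPLIANCE", years=7)
print(config)
```

The result would be passed as `boto3.client("s3").put_object_lock_configuration(Bucket="example-bucket", ObjectLockConfiguration=config)`. Compliance mode is the stricter choice for regulatory WORM requirements. Governance mode leaves an audited escape hatch for administrators.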

III. The Performance Paradigm: Latency Tiers and Architectural Differentiation

The comparison of object storage performance reveals the most significant architectural differences between the three services. AWS and Azure employ a traditional tiered latency model, while GCP leverages its proprietary infrastructure to create a uniform performance experience.

III.A. GCP’s Unique Value Proposition: Uniform Millisecond Latency (The Colossus Advantage)

GCP Cloud Storage fundamentally changes the economics of archival data retrieval by maintaining low, millisecond retrieval times across its entire spectrum of storage classes: Standard, Nearline, Coldline, and Archive.

This uniform access speed is possible because the GCS service is underpinned by Google’s proprietary, high-performance zonal cluster-level file system, Colossus. Colossus utilizes sophisticated techniques, including optimized SSD placement and a stateful gRPC-based streaming protocol, to deliver sub-millisecond read/write latency at massive scale.

The implication of this architecture is profound: latency transitions from a variable metric to a consistent, strategic feature. Data residing in GCS Archive Storage, the coldest and lowest-cost tier, remains instantly available for querying by services like BigQuery, eliminating the need to wait hours for retrieval jobs to complete. This facilitates the use case of active analytical archives, where vast datasets retained for compliance or long-term backup can be instantly accessed for unanticipated analytical needs, effectively turning passive storage into active compute-ready storage.

III.B. AWS and Azure Tiered Latency Models

AWS and Azure adhere to a tiered latency model where cost savings in storage capacity are directly exchanged for increased retrieval time and potential costs.

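The AWS S3 ecosystem offers millisecond latency across S3 Standard, Standard-IA, One Zone-IA, Glacier Instant Retrieval, and Intelligent-Tiering (including its Archive Instant Access tier). However, the deepest archival tiers, S3 Glacier Flexible Retrieval and S3 Glacier Deep Archive, are engineered purely for cost efficiency and require a restore before objects can be read. Glacier Flexible Retrieval restores take 1–5 minutes (Expedited), 3–5 hours (Standard), or 5–12 hours (Bulk). S3 Glacier Deep Archive, the lowest-cost AWS storage class ($0.00099 per GB/month), offers no expedited option: Standard restores complete within 12 hours and Bulk restores within 48 hours.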
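A restore from these classes is requested through S3's RestoreObject call, whose `RestoreRequest` argument names a retrieval tier and how many days the temporary copy stays readable. The sketch below builds that argument and rejects tier choices a storage class does not offer. It assumes AWS's published retrieval options, and the helper name is hypothetical.

```python
# Restore options for S3's asynchronous archive classes, with AWS's published
# typical completion times. Deep Archive has no Expedited option.
ALLOWED_TIERS = {
    "GLACIER": {"Expedited": "1-5 minutes", "Standard": "3-5 hours", "Bulk": "5-12 hours"},
    "DEEP_ARCHIVE": {"Standard": "within 12 hours", "Bulk": "within 48 hours"},
}


def restore_request(storage_class: str, tier: str, days: int) -> dict:
    """Build the RestoreRequest argument for S3's RestoreObject call.

    `days` is how long the temporary restored copy remains readable.
    """
    options = ALLOWED_TIERS.get(storage_class)
    if options is None:
        raise ValueError(f"no restore options modeled for storage class {storage_class!r}")
    if tier not in options:
        raise ValueError(f"{tier} retrieval is not offered for {storage_class}; "
                         f"use one of {sorted(options)}")
    if days < 1:
        raise ValueError("days must be at least 1")
    return {"Days": days, "GlacierJobParameters": {"Tier": tier}}


request = restore_request("DEEP_ARCHIVE", "Bulk", days=7)
print(request, "expected completion:", ALLOWED_TIERS["DEEP_ARCHIVE"]["Bulk"])
```

With boto3 this would be passed as `restore_object(Bucket="example-bucket", Key="archive/2019.tar", RestoreRequest=request)`. The bucket and key are placeholders.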

AWS addresses the need for specialized, low-latency performance through S3 Express One Zone. This class offers data access up to 10 times faster than S3 Standard, with consistent single-digit millisecond latency. It is optimized for transactional processing, AI/ML, and HPC, and is typically co-located in the same Availability Zone as the compute that uses it. Crucially, S3 Express One Zone stores data in a single Availability Zone (AZ), trading multi-AZ resilience for speed. It is therefore a dedicated performance tier rather than a substitute for multi-AZ classes or archival storage.

Azure Blob Storage similarly segments performance. The Hot, Cool, and Cold tiers offer millisecond retrieval. However, the Archive tier is an offline tier optimized for maximum cost savings. Blobs must be rehydrated to an online tier before they can be read, which can take up to 15 hours at standard priority. High-priority rehydration can complete in under an hour for objects smaller than 10 GB. For high-performance, transactional requirements, Azure provides Premium block blobs, which deliver lower and more consistent latency using high-performance SSD disks.

III.C. Comparative Retrieval Times for Coldest Tiers

The trade-off between performance and price becomes acutely clear when comparing the retrieval characteristics of the coldest tiers, a difference that determines whether archived data can serve as an active resource or only as a passive one.
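The coldest-tier comparison can be framed as a simple filter against a retrieval SLA. This sketch assumes rough worst-case time-to-first-byte figures from the providers' documentation: AWS Deep Archive Standard restores (12 hours), Azure Archive standard-priority rehydration (15 hours), and GCS Archive millisecond access treated as zero. It does not model faster options such as Azure high-priority rehydration. The constant and function names are illustrative.

```python
from typing import List

# Rough worst-case time to first byte (hours) for each provider's coldest tier,
# using the standard-priority retrieval path where a restore step exists.
COLDEST_TIER_FIRST_BYTE_HOURS = {
    "AWS S3 Glacier Deep Archive": 12.0,  # Standard restore; Bulk allows up to 48 h
    "Azure Archive": 15.0,                # standard-priority rehydration
    "GCS Archive": 0.0,                   # milliseconds, treated as zero; no restore step
}


def tiers_meeting_sla(max_hours: float) -> List[str]:
    """Coldest tiers whose worst-case first-byte time fits within max_hours, fastest first."""
    eligible = [(hours, name) for name, hours in COLDEST_TIER_FIRST_BYTE_HOURS.items()
                if hours <= max_hours]
    return [name for _, name in sorted(eligible)]


for sla in (1, 12, 24):
    print(f"SLA {sla} h:", tiers_meeting_sla(sla))
```

Any SLA tighter than roughly half a day leaves GCS Archive as the only coldest-tier option. Looser SLAs open up the cheaper-to-retrieve AWS and Azure tiers.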

IV. Storage Tier Pricing Comparison: Analyzing At-Rest Costs

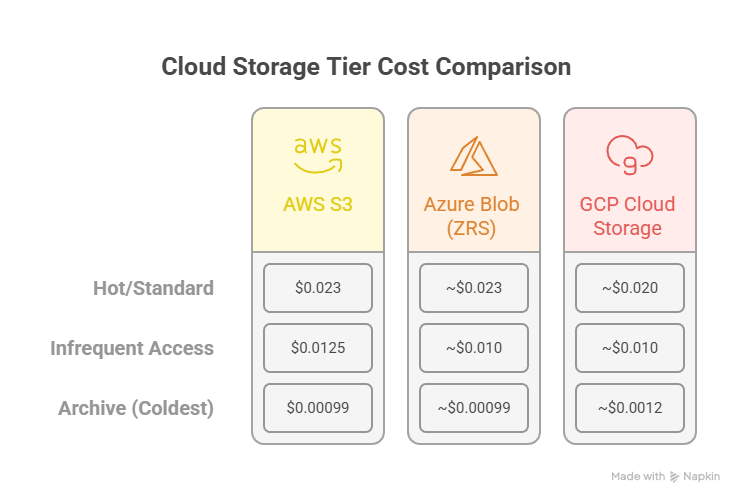

Pricing in object storage is influenced by three main factors: volume stored (at-rest cost), access frequency (operations and retrieval fees), and network transfer (egress). Analyzing at-rest storage costs, assuming North American region pricing, reveals competitive alignment but important nuance regarding redundancy.

IV.A. Hot/Standard Storage Tier Pricing (0–50 TB)

The initial pricing for frequently accessed (hot) data is a core competitive benchmark.

- AWS S3 Standard provides a clear starting point at $0.023 per GB/month for the first 50 TB, with prices scaling downward for larger volumes (down to $0.021/GB beyond 500 TB). S3 Standard is multi-AZ redundant by default.

- Azure Blob Hot presents a highly competitive headline price of approximately $0.0184 per GB/month for the first 50 TB, assuming Locally Redundant Storage (LRS).

- GCP Cloud Storage Standard typically sits close to S3, priced around $0.020 per GB/month for a regional bucket; multi-region locations are priced higher.

A critical consideration for strategic planners is the difference in default redundancy levels. The lower headline price for Azure Hot LRS ($0.0184/GB) covers replication within a single datacenter only. Zone-Redundant Storage (ZRS) replicates data across availability zones, which makes it comparable to the multi-AZ redundancy of S3 Standard or a GCS regional bucket. Upgrading to ZRS raises the Azure cost to approximately $0.023 per GB/month. Therefore, for enterprise-grade, highly resilient data, the at-rest costs of the hot tiers are closely aligned across all three major providers once redundancy levels are equalized, at roughly $0.020 to $0.023 per GB/month.
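The redundancy-equalized comparison can be reproduced with a short calculation. This sketch uses the per-GB/month prices quoted in this section and treats 1 TB as 1,000 GB for simplicity. It models S3 Standard's volume discounts with three bands. The $0.022 rate for the 50–500 TB band is an assumption based on AWS's published us-east-1 pricing and should be checked against current rates. Function names are illustrative.

```python
from decimal import Decimal
from typing import List, Optional, Tuple

GB_PER_TB = 1000  # simplification: decimal terabytes

# S3 Standard bands: first 50 TB, next 450 TB, everything over 500 TB.
S3_STANDARD_BANDS: List[Tuple[Optional[int], Decimal]] = [
    (50 * GB_PER_TB, Decimal("0.023")),
    (450 * GB_PER_TB, Decimal("0.022")),  # assumed middle-band rate; verify
    (None, Decimal("0.021")),             # None = no upper limit
]


def tiered_monthly_cost(gb: int, bands: List[Tuple[Optional[int], Decimal]]) -> Decimal:
    """Charge each slice of the stored volume at its own band's rate."""
    remaining = Decimal(gb)
    total = Decimal("0")
    for band_size, rate in bands:
        if remaining <= 0:
            break
        slice_gb = remaining if band_size is None else min(remaining, Decimal(band_size))
        total += slice_gb * rate
        remaining -= slice_gb
    return total


def flat_monthly_cost(gb: int, rate: str) -> Decimal:
    """Single-rate at-rest cost, as for the Azure and GCS list prices above."""
    return Decimal(gb) * Decimal(rate)


volume_gb = 50 * GB_PER_TB
costs = {
    "S3 Standard": tiered_monthly_cost(volume_gb, S3_STANDARD_BANDS),
    "Azure Hot LRS": flat_monthly_cost(volume_gb, "0.0184"),
    "Azure Hot ZRS": flat_monthly_cost(volume_gb, "0.023"),
    "GCS Standard (regional)": flat_monthly_cost(volume_gb, "0.020"),
}
for name, cost in costs.items():
    print(f"{name}: ${cost:,.2f}")
```

For 50 TB this yields about $1,150 for S3 Standard and Azure Hot ZRS, $1,000 for GCS Standard (regional), and $920 for Azure Hot LRS. The LRS discount largely disappears once redundancy is matched.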

IV.B. Infrequent Access and Mid-Cold Tiers (30/90-Day Minimums)

For data that is infrequently accessed, such as log files, secondary backups, or archived documents read less than once a month, providers offer tiers priced around $0.01 per GB/month. These tiers typically carry a 30-day minimum storage duration, and objects removed sooner incur early-deletion charges.

- AWS S3 Standard-Infrequent Access (IA) is priced at $0.0125 per GB/month, with a required 30-day minimum duration. AWS also offers S3 One Zone-IA at $0.01/GB for data that does not require multi-AZ resilience.

- Azure Cool is priced around $0.01 per GB/month, also requiring a 30-day minimum retention period.

- GCP Nearline provides comparable pricing at approximately $0.01 per GB/month and requires a 30-day minimum retention period.
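Whether a 30-day infrequent-access tier actually saves money depends on how much of the stored data is read back each month. The break-even sketch below considers only at-rest and per-GB retrieval charges, ignoring request fees and minimum billable object sizes. It uses the S3 Standard ($0.023) and Standard-IA ($0.0125 plus $0.01/GB retrieved) prices quoted in this section, and the function names are illustrative.

```python
from decimal import Decimal


def break_even_read_fraction(hot_price: str, cool_price: str, retrieval_fee: str) -> Decimal:
    """Monthly read fraction f at which the cooler tier stops being cheaper.

    Per GB stored, the cooler tier costs cool_price + retrieval_fee * f, where
    f = GB read per month / GB stored; the hot tier costs hot_price (no retrieval fee).
    """
    hot, cool, fee = Decimal(hot_price), Decimal(cool_price), Decimal(retrieval_fee)
    if fee <= 0:
        raise ValueError("break-even is undefined without a positive retrieval fee")
    return (hot - cool) / fee


def cheaper_tier(read_fraction: str, hot_price: str, cool_price: str, retrieval_fee: str) -> str:
    """Return "cool" if the cooler tier costs less per GB stored at this read fraction."""
    cool_total = Decimal(cool_price) + Decimal(retrieval_fee) * Decimal(read_fraction)
    return "cool" if cool_total < Decimal(hot_price) else "hot"


# S3 Standard vs. S3 Standard-IA at the prices quoted above.
print(break_even_read_fraction("0.023", "0.0125", "0.01"))
print(cheaper_tier("0.5", "0.023", "0.0125", "0.01"), cheaper_tier("2", "0.023", "0.0125", "0.01"))
```

With these prices the break-even is 1.05. Standard-IA therefore remains cheaper until roughly 105% of the stored volume is read back per month, before request charges are considered. The same function applies to Azure Cool or GCS Nearline with their respective prices.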

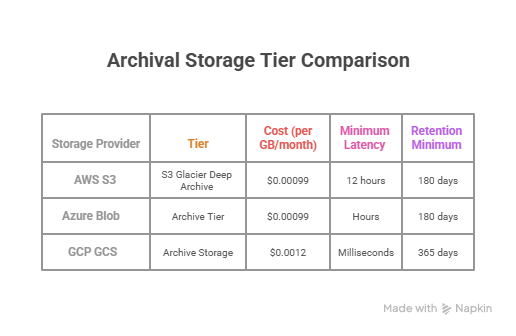

IV.C. Deep Archive Tier Pricing and Duration Penalties

The coldest tiers are designed for long-term retention and compliance, maximizing cost savings.

The at-rest storage costs for the deepest archives are nearly identical: AWS S3 Glacier Deep Archive and Azure Archive are both priced at approximately $0.00099 per GB/month. GCP Archive is slightly higher at $0.0012 per GB/month.

However, the cost advantage of these tiers is tied to mandatory minimum storage durations. Deleting, overwriting, or moving an object out of the tier before this period ends triggers a pro-rated early-deletion charge for the remaining days. AWS Deep Archive and Azure Archive enforce a 180-day minimum duration, while GCS Archive imposes the longest minimum, at 365 days. Consequently, although GCP Archive offers instant retrieval, premature deletion can incur a larger penalty than with its competitors.
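The early-deletion penalty can be estimated as the storage charge for the unmet portion of the minimum duration. This sketch assumes a flat 30-day billing month and uses the at-rest prices and minimum durations quoted above. Providers' exact day-count conventions may differ, and the function name is illustrative.

```python
from decimal import Decimal


def early_deletion_charge(size_gb: str, price_per_gb_month: str,
                          minimum_days: int, days_stored: int) -> Decimal:
    """Pro-rated charge for removing an object before its minimum storage duration.

    Assumption: the charge equals the storage cost of the remaining days,
    billed against a 30-day month.
    """
    remaining_days = max(0, minimum_days - days_stored)
    monthly = Decimal(size_gb) * Decimal(price_per_gb_month)
    return monthly * Decimal(remaining_days) / Decimal(30)


for name, price, minimum in [
    ("AWS Deep Archive", "0.00099", 180),
    ("Azure Archive", "0.00099", 180),
    ("GCS Archive", "0.0012", 365),
]:
    charge = early_deletion_charge("1000", price, minimum, days_stored=90)
    print(f"{name}: ${charge:.2f}")
```

Deleting 1,000 GB after 90 days costs about $2.97 in AWS Deep Archive or Azure Archive but $11.00 in GCS Archive. GCS's 365-day minimum leaves 275 days to pay for, at a higher per-GB rate.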

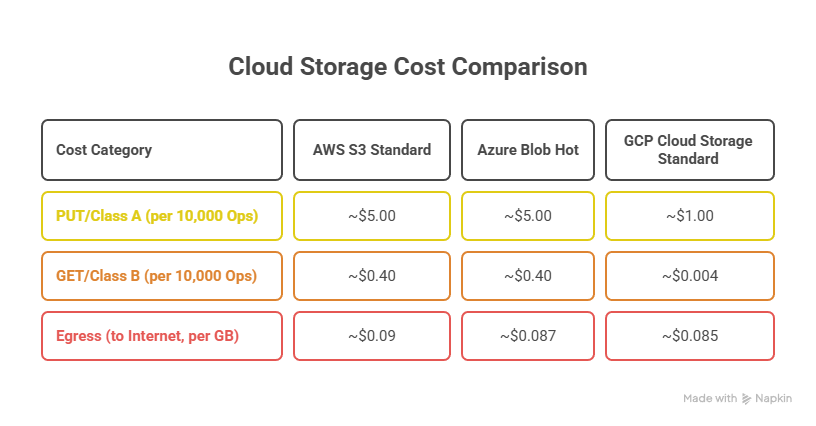

V. Total Cost of Ownership (TCO) Drivers: Operations, Retrieval, and Egress

The Total Cost of Ownership is determined primarily by how data is accessed and moved, not merely how much data is stored. Volatility in TCO is almost always traced back to operations, retrieval, and network egress charges.

V.A. Operations Charges (PUT, GET, LIST Transactions)

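Object storage providers charge per request (or operation). Google Cloud groups these into Class A operations (writes, configuration changes, and list operations) and Class B operations (reads such as GET); AWS and Azure bill comparable write/list and read categories at different rates.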

For modern distributed applications, data lakes, and microservices architectures that generate millions of small data reads, request charges can become a dominant variable. At published list prices, however, hot-tier request costs are closely aligned across providers rather than dramatically different. AWS S3 Standard charges $0.0004 per 1,000 GET requests ($0.004 per 10,000), and GCP Cloud Storage Standard prices Class B operations at the same rate. Azure Hot read operations fall in the same order of magnitude.

Write-class requests cost roughly ten times more than reads. S3 charges $0.005 per 1,000 PUT, COPY, POST, or LIST requests ($0.05 per 10,000), and GCS Standard Class A operations in regional buckets are priced at the same $0.005 per 1,000. Request charges also rise in colder tiers. For high-throughput querying, log processing, or analytical engines that issue very large volumes of requests, the total request count therefore matters more than the differences among the three providers' hot-tier request rates. That count is driven largely by object size and access pattern.
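Because request charges scale with request count rather than stored volume, a quick per-1,000 calculation makes the effect of request volume concrete. In this sketch prices are passed in as parameters. The rates in the example ($0.0004 per 1,000 reads, $0.005 per 1,000 writes) are illustrative and should be replaced with the current published rate for the relevant provider, region, and tier. Function names are illustrative.

```python
from decimal import Decimal


def monthly_request_cost(requests: int, price_per_1000: str) -> Decimal:
    """Charge for one month of requests billed per 1,000 requests."""
    return Decimal(requests) / Decimal(1000) * Decimal(price_per_1000)


def workload_request_cost(reads: int, writes: int,
                          read_price_per_1000: str, write_price_per_1000: str) -> Decimal:
    """Combined read (GET/Class B) and write (PUT/Class A) request charges."""
    return (monthly_request_cost(reads, read_price_per_1000)
            + monthly_request_cost(writes, write_price_per_1000))


# Illustrative workload: 1 billion reads and 100 million writes in a month.
total = workload_request_cost(1_000_000_000, 100_000_000, "0.0004", "0.005")
print(f"${total:,.2f}")
```

At these example rates the reads cost $400 and the writes $500. Writing the same data as smaller objects multiplies the write count, and the request bill, proportionally.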

V.B. Data Retrieval Fees (The Cold Storage Tax)

Data retrieval fees are imposed when objects stored in cold or archive tiers are accessed. This fee serves as a necessary deterrent for using archive tiers for anything other than truly infrequent access.

Retrieval fees vary based on the tier’s intended latency:

- AWS S3 Standard-IA (Infrequent Access) incurs a retrieval fee of $0.01 per GB.

- GCP Cloud Storage uses retrieval fees as the primary cost adjustment for its uniform low latency access model. The fees scale higher as the storage cost decreases: Nearline is $0.01/GB retrieved, Coldline is $0.02/GB, and Archive Storage is the highest at $0.05 per GB retrieved.

The high retrieval fee for GCS Archive ($0.05/GB) must be strategically balanced against its millisecond latency guarantee. If an organization stores 100 TB in GCS Archive, retrieving just 1 TB incurs roughly $50 in retrieval fees. For workloads that read archived data repeatedly, these per-GB fees, together with the higher per-operation charges of cold tiers, accumulate quickly. As a result, instantaneous access from GCS can cost substantially more than a standard or bulk restore from AWS or Azure. However, if instantaneous retrieval is mandatory for business continuity or opportunistic analytics, the added fee may be justified by eliminating the hours-long waiting period imposed by AWS Glacier or Azure Archive.
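The retrieval-fee example can be sketched as arithmetic, assuming decimal terabytes (1 TB = 1,000 GB) and the GCS prices quoted in this section. Operation charges and Autoclass-managed buckets are not modeled. Constant and function names are illustrative.

```python
from decimal import Decimal

GB_PER_TB = 1000  # decimal terabytes, matching the worked example in the text

# GCS retrieval fee per GB read, by storage class (Standard has none).
GCS_RETRIEVAL_FEE_PER_GB = {
    "STANDARD": Decimal("0"),
    "NEARLINE": Decimal("0.01"),
    "COLDLINE": Decimal("0.02"),
    "ARCHIVE": Decimal("0.05"),
}
GCS_ARCHIVE_PRICE_PER_GB_MONTH = Decimal("0.0012")


def retrieval_fee(storage_class: str, gb_read: int) -> Decimal:
    """Per-GB retrieval charge only; operation (request) charges are excluded."""
    return GCS_RETRIEVAL_FEE_PER_GB[storage_class] * gb_read


stored_gb = 100 * GB_PER_TB
read_gb = 1 * GB_PER_TB
monthly_storage = GCS_ARCHIVE_PRICE_PER_GB_MONTH * stored_gb
fee = retrieval_fee("ARCHIVE", read_gb)
print(f"Archive storage for 100 TB: ${monthly_storage:,.2f}/month")
print(f"Retrieving 1 TB:            ${fee:,.2f}")
```

Reading back 1 TB costs $50, roughly 40% of the $120 it costs to keep the full 100 TB in Archive for a month.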

V.C. Network Egress Costs (The Largest Hidden Cost)

Network egress, or Data Transfer Out (DTO) to the public internet, remains the most substantial variable and often the largest hidden cost in cloud services, functioning as a primary mechanism of vendor stickiness. All three hyperscale providers charge similar, substantial rates for data leaving their network.

Published egress rates for the first terabyte (TB) transferred monthly are tightly grouped: AWS charges approximately $0.09 per GB, Azure charges around $0.087 per GB, and GCP charges roughly $0.085 per GB.

Organizations must strategically manage egress by utilizing Content Delivery Networks (CDNs) or by leveraging the free allowances provided (e.g., AWS and Azure typically offer 100 GB of free egress per month, while GCP offers 0–200 GB free per month, depending on location). The consistency of high egress costs across all providers underscores the financial importance of careful architectural design that minimizes data transfer across network boundaries.
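Because egress is billed on volume after any free allowance, a flat-rate estimate is straightforward. This sketch assumes a single rate per provider, namely the first-tier rates quoted above. It applies free allowances of 100 GB for AWS and Azure and the top of GCP's 0–200 GB range, with 1,000 GB transferred in a month. Real price sheets step down at higher monthly volumes, and the function name is illustrative.

```python
from decimal import Decimal


def monthly_egress_cost(gb_out: int, rate_per_gb: str, free_gb: int = 0) -> Decimal:
    """Internet egress for one month at a single flat rate after a free allowance.

    Simplification: real price sheets apply lower rates at higher volumes.
    """
    billable = max(0, gb_out - free_gb)
    return Decimal(billable) * Decimal(rate_per_gb)


for provider, rate, free in [("AWS", "0.09", 100), ("Azure", "0.087", 100), ("GCP", "0.085", 200)]:
    print(f"{provider}: ${monthly_egress_cost(1000, rate, free):.2f}")
```

This yields about $81.00 for AWS, $78.30 for Azure, and $68.00 for GCP. At this scale the free allowance matters as much as the small differences in per-GB rates.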

VI. Strategic Selection Guide and Recommendations

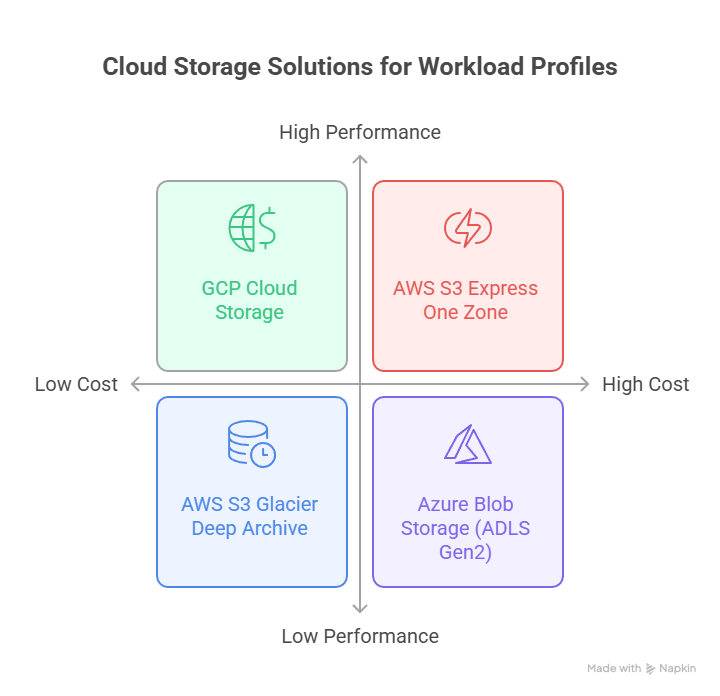

The optimal object storage platform is dictated by the specific technical and financial profile of the target workload, prioritizing architectural fit over marginal cost advantages in storage capacity.

VI.A. Workload Suitability Matrix

VI.B. Automated Tiering for Cost Optimization

All major providers offer solutions to manage data lifecycle costs dynamically:

- AWS S3 Intelligent-Tiering: This feature offers the most mature automatic cost optimization tool. It automatically moves objects between Frequent Access, Infrequent Access, and Archive Instant Access tiers based on observed access patterns. Objects not accessed for 30 consecutive days move to Infrequent Access, and after 90 days to Archive Instant Access. Crucially, all three of these tiers deliver the same millisecond latency as S3 Standard, ensuring cost savings without performance degradation. Optional Archive Access and Deep Archive Access tiers, which require asynchronous restores, can also be enabled.

- GCP Autoclass: GCP also offers the Autoclass feature, which automatically transitions objects among Standard, Nearline, and, if configured, Coldline and Archive based on access, returning accessed objects to Standard. A significant financial advantage of Autoclass is that objects in Autoclass-managed buckets incur no retrieval or early-deletion charges, mitigating the primary TCO risk associated with accessing cold GCS data; in exchange, a per-object management fee applies.
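Both automated-tiering features can be approximated as idle-day thresholds. This simplified model assumes the published schedules: 30 and 90 days for S3 Intelligent-Tiering's automatic tiers, and 30, 90, and 365 days for an Autoclass bucket whose terminal class is Archive. It ignores the optional asynchronous Intelligent-Tiering archive tiers and object-size exceptions. Any access resets the idle counter. The constant and function names are hypothetical.

```python
from typing import List, Tuple

# (minimum idle days, tier) pairs, ordered from the coldest tier down.
S3_INTELLIGENT_TIERING: List[Tuple[int, str]] = [
    (90, "Archive Instant Access"),
    (30, "Infrequent Access"),
    (0, "Frequent Access"),
]
GCS_AUTOCLASS_TO_ARCHIVE: List[Tuple[int, str]] = [
    (365, "ARCHIVE"),
    (90, "COLDLINE"),
    (30, "NEARLINE"),
    (0, "STANDARD"),
]


def tier_after_idle_days(days_idle: int, thresholds: List[Tuple[int, str]]) -> str:
    """Tier an object occupies after `days_idle` consecutive days without access.

    Any access resets the idle counter, which returns the object to the hot tier.
    """
    for min_days, tier in thresholds:
        if days_idle >= min_days:
            return tier
    raise ValueError("days_idle must be non-negative")


for days in (0, 45, 120, 400):
    print(days, tier_after_idle_days(days, S3_INTELLIGENT_TIERING),
          tier_after_idle_days(days, GCS_AUTOCLASS_TO_ARCHIVE))
```

The model shows the key design difference. Intelligent-Tiering's automatic tiers stop at a millisecond-latency archive tier. An Archive-terminal Autoclass bucket keeps descending to the cheapest class, still with millisecond access.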

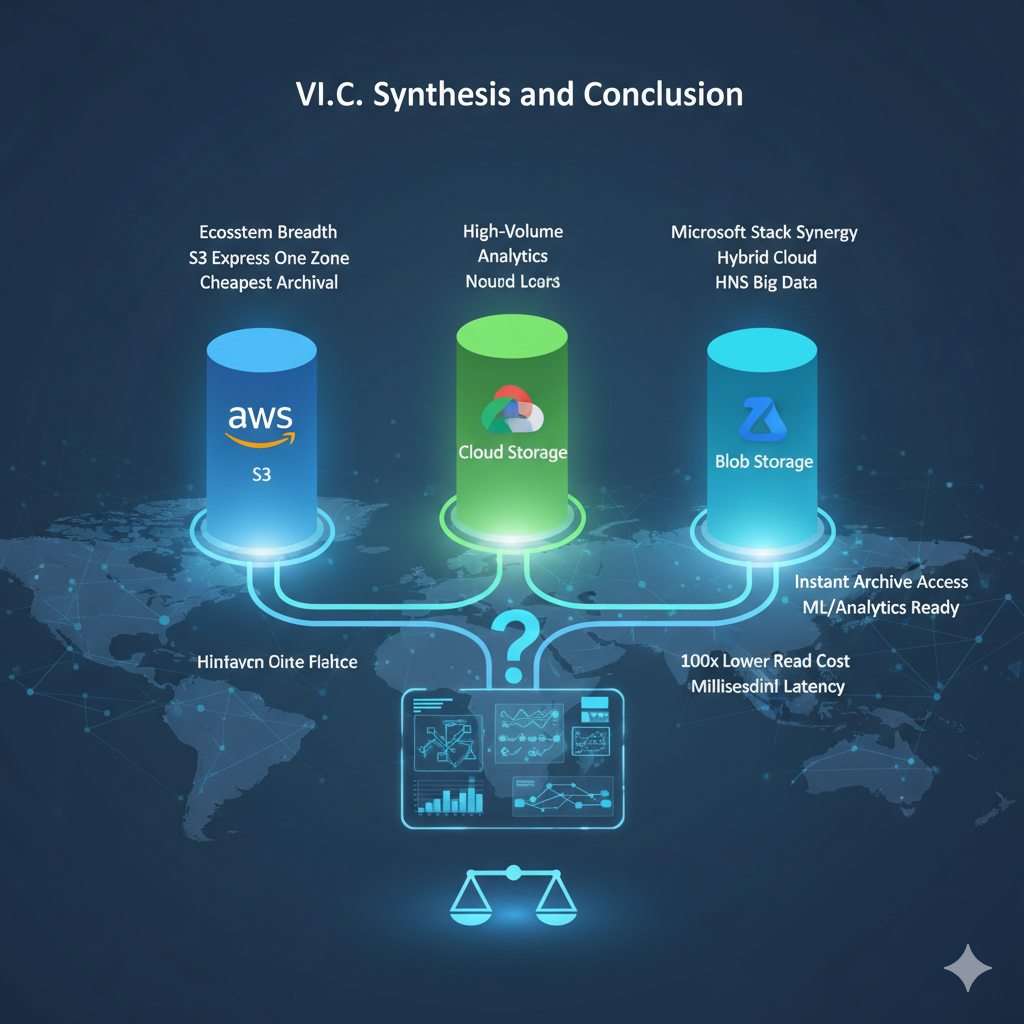

VI.C. Synthesis and Conclusion

The choice among AWS S3, Azure Blob Storage, and GCP Cloud Storage is a nuanced architectural decision reflecting the specific characteristics of the data pipeline.

For organizations prioritizing ecosystem breadth, specialized performance extremes (S3 Express One Zone), or the lowest-cost deep archival storage, AWS S3 remains the most mature and flexible choice. Its wide feature set and third-party compatibility are unmatched.

For organizations tightly integrated within the Microsoft stack, or those requiring robust hybrid cloud and HNS capabilities for big data, Azure Blob Storage offers seamless synergy and powerful native tooling.

However, for enterprises building modern data lakes optimized for high-volume analytics, or those that require archived data to be instantly accessible for future machine learning and analytical workloads, GCP Cloud Storage provides a compelling and distinct architectural advantage. Strong global consistency, extending to multi-region buckets, combines with uniform millisecond latency across all tiers. Together these fundamentally reframe archival strategy, shifting it from passive retention to active, low-latency analysis, provided the higher cold-tier retrieval fees fit the access budget.